# Databricks data lakehouse full

Generally, a single data processing pipeline comprises several notebooks run in sequence, each focusing on a specific type of processing, such as raw data cleaning, key generation, or dimensional model generation. The final output of each pipeline is stored in the cloud, typically in the Delta Lake format, which is widely regarded as the de facto standard for modern data lake analytics. Although the pipelines run on a distributed, parallel computing platform, development is fairly straightforward at a technical level, and developers don’t necessarily need to delve into the intricacies of the Spark engine. However, full customization is available for developers who require it.

# Databricks data lakehouse code

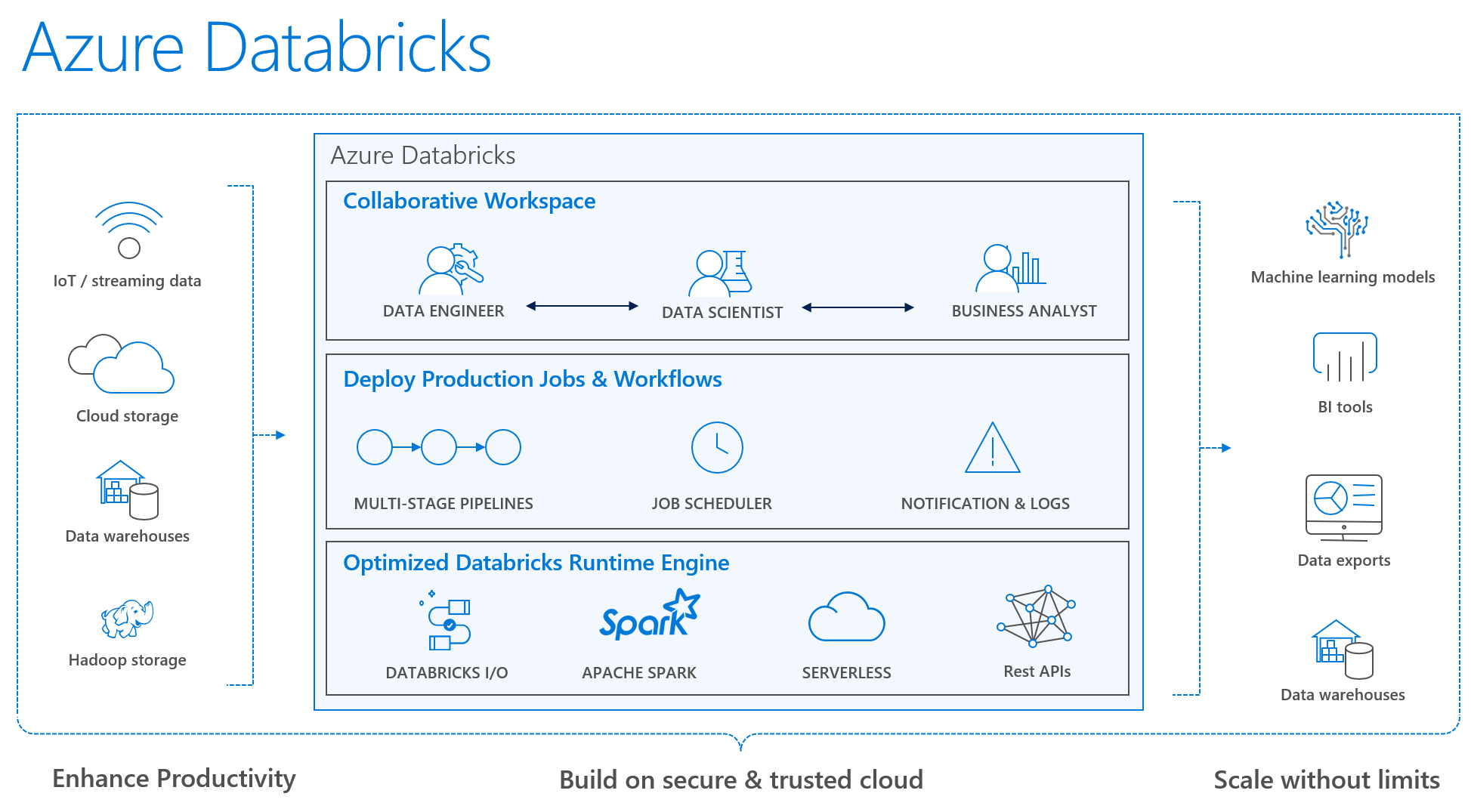

Databricks’ flagship product, the Databricks Lakehouse Platform, is a cloud-based data and analytics platform powered by Spark and Delta Lake. The platform combines the best elements of data lakes and data warehouses, providing tools for data engineers, data scientists, and business intelligence analysts to collaboratively develop solutions ranging from small-scale, highly customized data needs to enterprise-level data platforms. With its roots in the Big Data world, Databricks is particularly adept at handling large volumes of data, which typically reside in cloud storage.

Databricks is available on all major public cloud platforms (Azure, AWS, and GCP) and integrates with their storage, security, and compute infrastructure while offering administration capabilities to users. Under the hood, Databricks relies on distributed, parallel computing powered by Apache Spark. Users specify the types of clusters required for computation, while Databricks provisions the necessary machines, starts them on demand or on a predefined schedule, and shuts them down when they are no longer needed. Data is stored in the customer’s own cloud storage, such as Azure Data Lake Storage Gen2 or AWS S3, separately from compute. Because storage and compute are decoupled, each can scale independently, and the total cost of ownership is the sum of the two.

# Data processing with Databricks

During data processing, Spark DataFrames, which resemble database tables, serve as the typical unit of processing and are managed by the Spark engine. DataFrames are processed in the cluster’s memory and, in data processing pipelines, are manipulated using Python, Scala, R, or SQL. Pipeline development takes place in Databricks notebooks, which consist of one or more command cells executed sequentially. Different languages can be used within a single notebook, with code (e.g., Python or SQL) written in individual command cells.

# Databricks data lakehouse software

Databricks, a US-based software company, was founded by the creators of Apache Spark, an open-source analytics engine.
